The False Logic Problem

In Python:

```python
score = 92  # example input

if score >= 90:
    print("A")
else:
    print("Try again")
```

This is real logic. The machine checks a condition, selects a branch, and executes an outcome. Same input, same result, every time. That’s what it means to evaluate logic explicitly.
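To make "same input, same result" concrete, here is a minimal sketch that wraps the same rule in a function and calls it repeatedly. The name grade is our own illustrative choice, not part of any library. Because the branch is chosen by an explicit comparison, a thousand calls produce exactly one distinct answer, and the boundary between 89 and 90 is exact.

```python
def grade(score: int) -> str:
    # Explicit rule: a single comparison decides which branch runs.
    if score >= 90:
        return "A"
    else:
        return "Try again"

# Same input, same result: 1,000 calls collapse to one distinct answer.
results = {grade(92) for _ in range(1000)}
print(results)  # {'A'}

# The threshold is applied exactly: 89 and 90 land on different sides.
print(grade(89), grade(90))  # Try again A
```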

Now try this: ask ChatGPT,

"If my test score is 92, what grade did I get?"

It might say “A.” That sounds right. But here’s what actually happened:

No condition was evaluated. No rule was applied.

The model simply predicted what text usually follows that kind of question.

It looks like logic.

It sounds like logic.

But it’s just pattern matching—an illusion of reasoning.
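The difference can be sketched with a deliberately tiny stand-in for text prediction. This is not how ChatGPT is built; real language models are vastly larger and more sophisticated. It only illustrates the principle of choosing a continuation by similarity to previously seen text. The CORPUS entries and the predict_next function are hypothetical. The predictor never compares a number to 90; it only counts shared words, so it answers "A" for a score of 40 as well. That failure belongs to the toy, but it shows why a correct-looking answer alone does not prove that a rule was applied.

```python
from collections import Counter

# Hypothetical "training" text: prompts paired with the answer that followed them.
CORPUS = [
    ("my test score is 95 what grade did i get", "A"),
    ("my test score is 92 what grade did i get", "A"),
    ("my test score is 98 what grade did i get", "A"),
    ("my test score is 71 what grade did i get", "C"),
]

def predict_next(prompt: str) -> str:
    """Toy predictor: favor the answer whose prompts share the most words with this one.

    No threshold is checked; only word overlap is counted.
    """
    words = set(prompt.lower().split())
    votes = Counter()
    for seen_prompt, answer in CORPUS:
        votes[answer] += len(words & set(seen_prompt.split()))
    return votes.most_common(1)[0][0]

print(predict_next("my test score is 92 what grade did i get"))  # A
print(predict_next("my test score is 40 what grade did i get"))  # A, although 40 < 90
```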

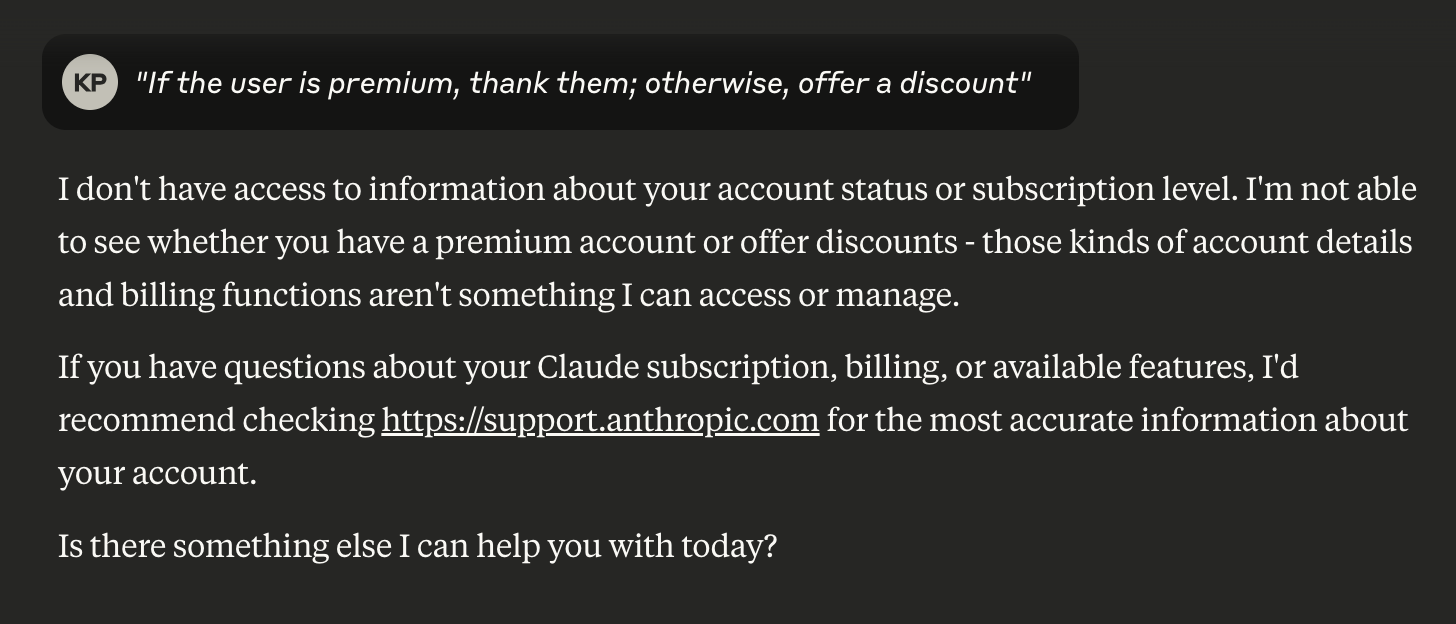

Another example:

Why This Matters in Your Classroom

Students need to distinguish between systems that execute logic and systems that mimic the appearance of logic.

Try this:

1. Show students the Python if/else block above.
2. Show them an AI response to a similar logical prompt.
3. Ask: "Which one actually followed conditional reasoning? Which one just predicted what should come next?"
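To extend the activity, students could record the AI's answers for several scores, including values right at the boundary, and check them against the explicit rule. A minimal sketch, assuming Python 3.9+; grade, compare, and recorded_ai_answers are hypothetical names, and the answers in the dictionary are made-up placeholders meant to be replaced with the responses students actually collect.

```python
def grade(score: int) -> str:
    # The same explicit rule as the if/else block: one comparison picks the branch.
    return "A" if score >= 90 else "Try again"

# Placeholder answers, invented for illustration: replace them with what the AI really said.
recorded_ai_answers = {
    92: "A",
    90: "A",
    89: "A",
    45: "Try again",
}

def compare(answers: dict[int, str]) -> list[tuple[int, str, str]]:
    """Return (score, rule_answer, ai_answer) for every score where the two disagree."""
    mismatches = []
    for score, ai_answer in sorted(answers.items()):
        expected = grade(score)
        if ai_answer.strip() != expected:
            mismatches.append((score, expected, ai_answer))
    return mismatches

for score, expected, got in compare(recorded_ai_answers):
    # With the placeholders above, this prints: score 89: rule says 'Try again', AI said 'A'
    print(f"score {score}: rule says {expected!r}, AI said {got!r}")
```

Boundary scores such as 89 and 90 are where a rule and a pattern are most likely to part ways, so they make good test cases.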

This isn't about teaching students to distrust AI. It's about teaching them to recognize two fundamentally different kinds of processing: one that follows rules and one that follows patterns.

In a world where AI increasingly resembles human reasoning, this distinction isn't just useful. It's essential.